I consider creating and managing content databases a two part excercise. First, design for availability. Second, design for performance. Before I move forward, consider the following 2 points:

1. Service Level Agreements (SLAs). How long can your site collections be out of service? When designing your site collections it is important to remember that site collections are contained in content databases, and cannot span content databases. Consider the following when designing for site collection availability:

Recovery Time Objective - The recovery time objective (RTO) defines how long your system can be down before it is back online after a disruption. The disruption could be due to anything from a SQL Server outage to a WFE Server failure. The RTO should include data recovery at the server, farm, database, site, list, and item levels.

Recovery Point Objective - The recovery point objective (RPO) defines your data loss threshold, measured in time. If you run daily backups only and ignore the SQL Server transaction logs, then your RPO is 23 hours, 59 minutes, and 59 seconds. Any data written to SharePoint Server 2007 after you ran the backup cannot be restored via native tools until after the next backup. Many organizations assume this risk without fully understanding the impact of losing 24 hours worth of data.

2. Performance. You don't have to host all content databases on the same disks in your SQL Server. In fact, you don't even have to host them on the same SQL instance! For very large and/or busy site collections, you can host them on very fast disk using RAID 1+0 or 0+1 (depends on your speed vs. availability). You could then host the more generic site collections on less expensive configurations/disks, and assume more risk.

So, how do we begin our design? I would begin by calculating both the A. size and B. performance levels required. If you have a very fast site collection that is mostly read (think WCM / Publishing Site), then you need to optimize the data files on your SQL Server. That means a config like RAID 0+1 for super speed, or RAID 1+0 for more availability and decent speed. Your transaction logs won't matter as much. But, if you are designing for a highly-collaborative environment, then you probably want to optimize your transaction log files.

Microsoft often states that 100GB is the recommended maximum for a content database. This is mainly because small to medium shops may have difficulty maintaining, backing up, and restoring large content databases. But, let's be honest: You can't span content databases, so you are accepting a maximum Site Collection size of 100GB (or less if you count the 2nd stage recycle bin). Really? 100GB doesn't seem very big anymore :) If you need larger site collections, optimize SQL and rock on...

There are some performance hits with large site collections, but these usually aren't that bad. Just be sure to test, test, test. Visual Studio works great for stress testing your site collection performance.

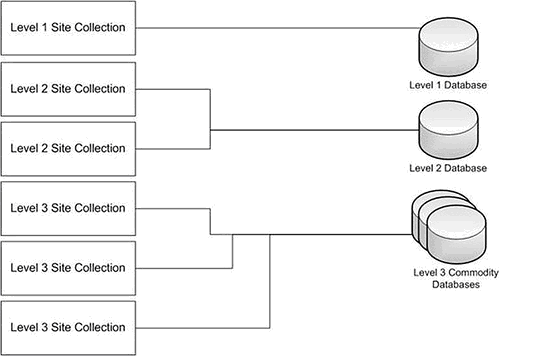

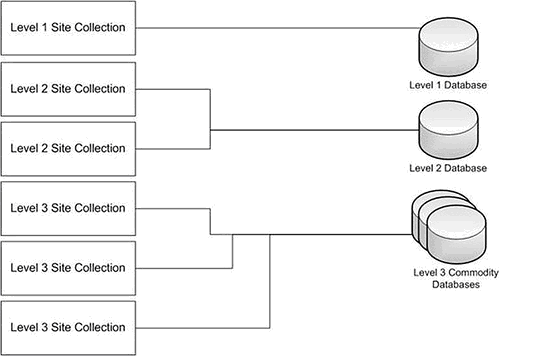

We also need to think through our SLAs when designing content databases/site collections. The two are inseparable and are designed simultaneously. SharePoint Server 2007 can adapt to a multi-tiered SLA arrangement at the site collection/content database levels. If you grouped site collections by their criticality in corresponding content databases, you can then use SQL Server tools to manage them to different support levels. The below picture shows a possible database design for hosting three different SLA levels within a single farm.

If you group site collections similar to what is shown in the above picture, then you can manage them accordingly. Level 1 site collections could have frequent SQL level backups and be mirrored to another SQL instance. Level 2 site collections might be transaction log shipped, and Level 3 site collections might be in simple recovery mode and backed up only once a day. Additionally, every level of content database could be on a different SQL Server instance, and on different disk subsystems. Note that this depicts a one-to-one relationship between a Level 1 site collection and a Level 1 content database. While this provides robust recovery and performance options, it does not scale well. (Microsoft recommends no more than 100 content databases per Web application)

Note: If you have already over-loaded and over-populated your content databases, check out Todd Klindt's blog here to learn how to move them around. (nice post, Todd!) Another best practice is to create multiple content databases to support multiple site collections. A very common mistake we see is customers creating all site collections in a single content database. This usually results from a lack of understanding about how the product should be architected and partially from the process of rapid deployments. All is not lost, however, if you have implemented this way. I feel obligated to explain an almost always misunderstood Central Administration interface. The first picture below shows a screen in Central Administration Application Management Content Databases to take a database offline. The second picture shows the status of the database as stopped after it has been taken offline.

These settings do not mean what they appear to mean. Taking a database offline merely blocks any new site collections from being created in it. It does not take the database offline as one might think. Users can still upload and download content, view Web pages, and process workflows. The content database status will also show as stopped. Once again, this means only that new site collections cannot be created in this database. If you want a one-to-one site collection to content database association, taking the hosting database offline is the best method to accomplish this.